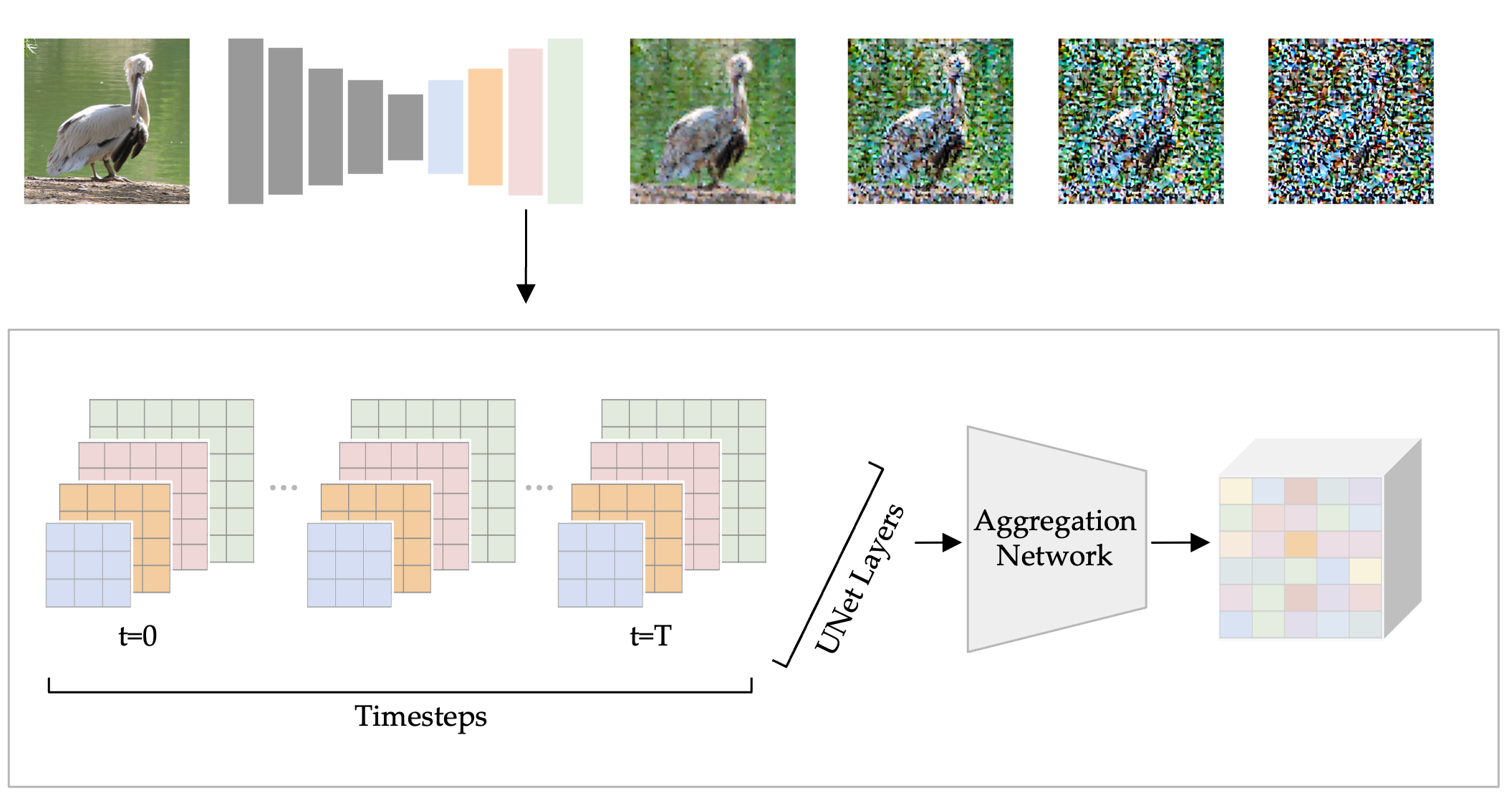

Diffusion Hyperfeatures

We extract feature maps that vary across timesteps and layers of the diffusion process and consolidate them with our lightweight aggregation network to create our Diffusion Hyperfeatures, in contrast to prior methods that select a subset of raw diffusion features. For real images, we extract these features from the inversion process, and for synthetic images we extract them from the generation process. Given a pair of images, we find semantic correspondences by performing a nearest-neighbor search over their Diffusion Hyperfeatures.
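To make the two steps above concrete, here is a minimal NumPy sketch under stated assumptions. The aggregation is a simplified stand-in, a softmax-weighted sum over feature maps that are assumed to already share the same channel count and resolution; it is not the learned aggregation network itself. The names `aggregate_features`, `nearest_neighbor_matches`, and `mixing_logits` are hypothetical, and cosine similarity is an assumed choice of matching score.

```python
import numpy as np

def aggregate_features(feature_maps, mixing_logits):
    """Collapse N feature maps into one descriptor map (illustrative only).

    feature_maps:  array of shape (N, C, H, W), one map per (layer, timestep)
                   pair, assumed pre-aligned to a common size.
    mixing_logits: array of shape (N,), hypothetical per-map weights.
    """
    feature_maps = np.asarray(feature_maps, dtype=np.float64)
    logits = np.asarray(mixing_logits, dtype=np.float64)
    weights = np.exp(logits - logits.max())  # numerically stable softmax
    weights /= weights.sum()
    # Weighted sum over the first axis gives a (C, H, W) descriptor map.
    return np.tensordot(weights, feature_maps, axes=(0, 0))

def nearest_neighbor_matches(source_desc, target_desc, source_points):
    """For each (row, col) in the source, return the best-matching (row, col)
    in the target under cosine similarity (an assumed similarity measure)."""
    c, h, w = target_desc.shape
    target_flat = target_desc.reshape(c, h * w)
    target_flat = target_flat / (
        np.linalg.norm(target_flat, axis=0, keepdims=True) + 1e-8
    )
    matches = []
    for row, col in source_points:
        query = source_desc[:, row, col]
        query = query / (np.linalg.norm(query) + 1e-8)
        best = int(np.argmax(query @ target_flat))
        matches.append((best // w, best % w))
    return matches
```

In this sketch, each image would get its own descriptor map from `aggregate_features`, and keypoints annotated on one image would be passed as `source_points` to find their counterparts in the other.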

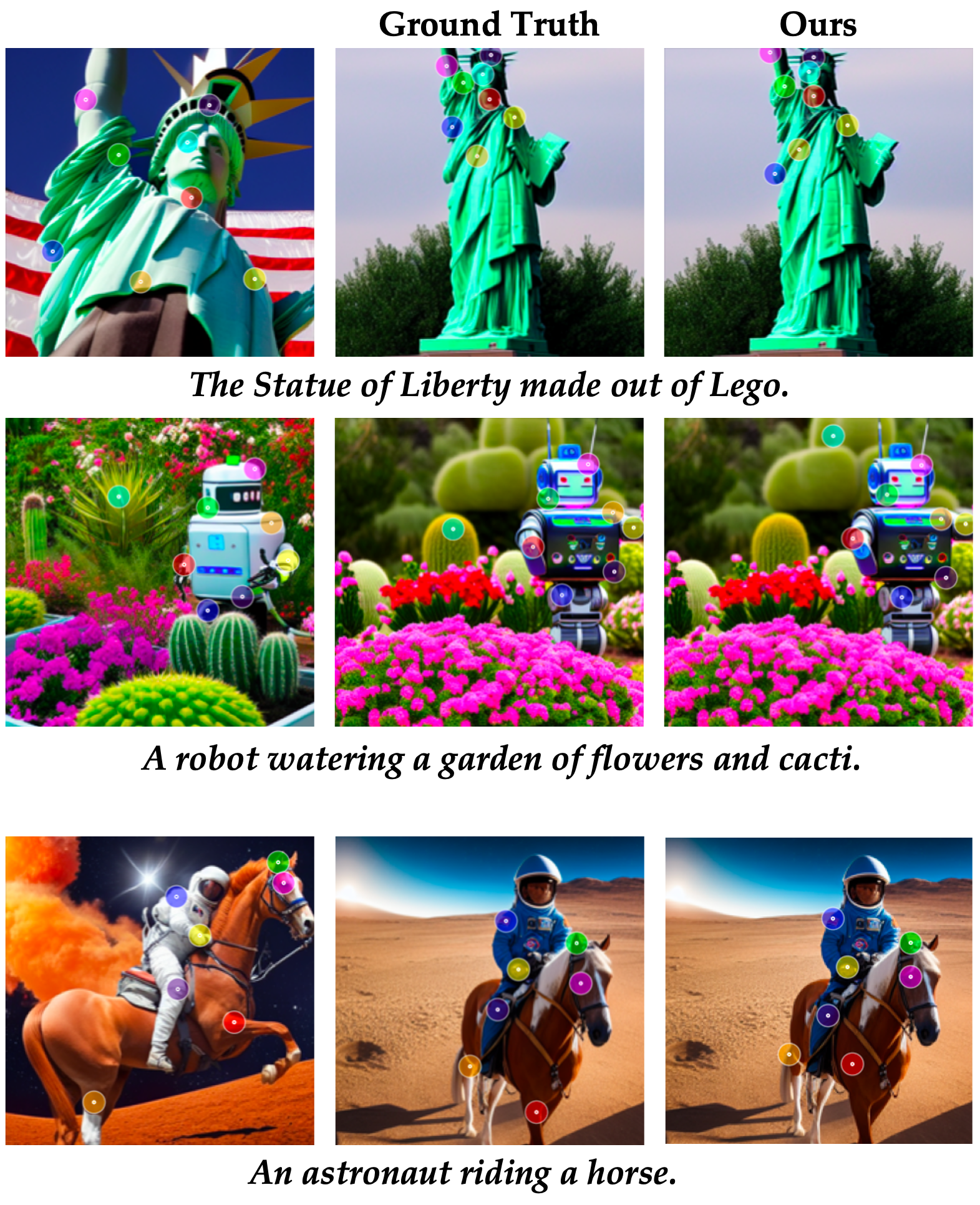

Semantic Keypoint Matching

Tuning on Real Images. We distill Diffusion Hyperfeatures for semantic correspondence by tuning our aggregation network on real images from SPair-71k. The dataset consists of image pairs annotated with common semantic keypoints for a variety of object categories spanning vehicles, animals, and household objects.

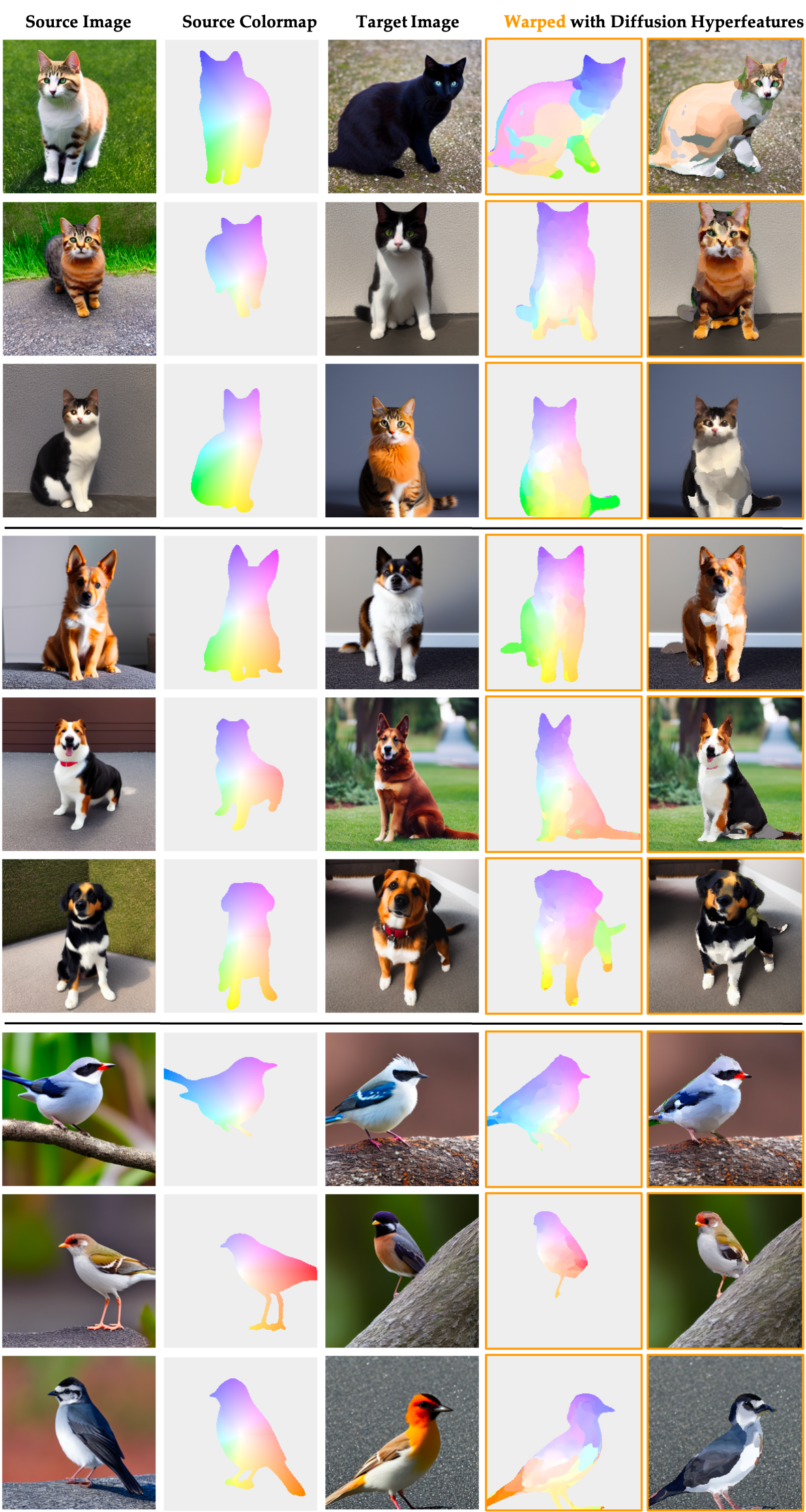

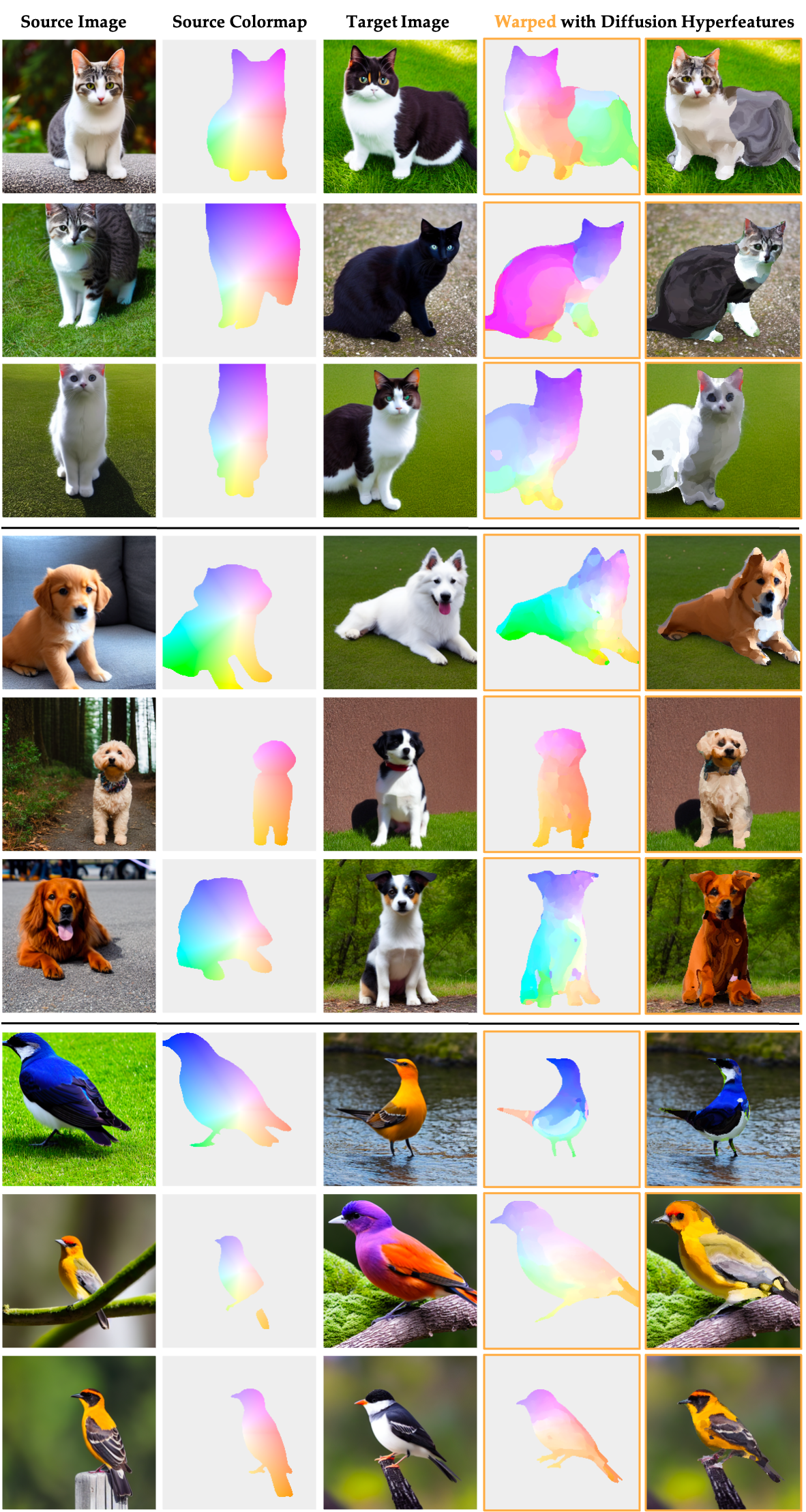

Transfer to Synthetic Images. We can take the same aggregation network, tuned on real images that cover only a limited set of object categories, and apply it to synthetic images containing completely unseen, out-of-domain objects.

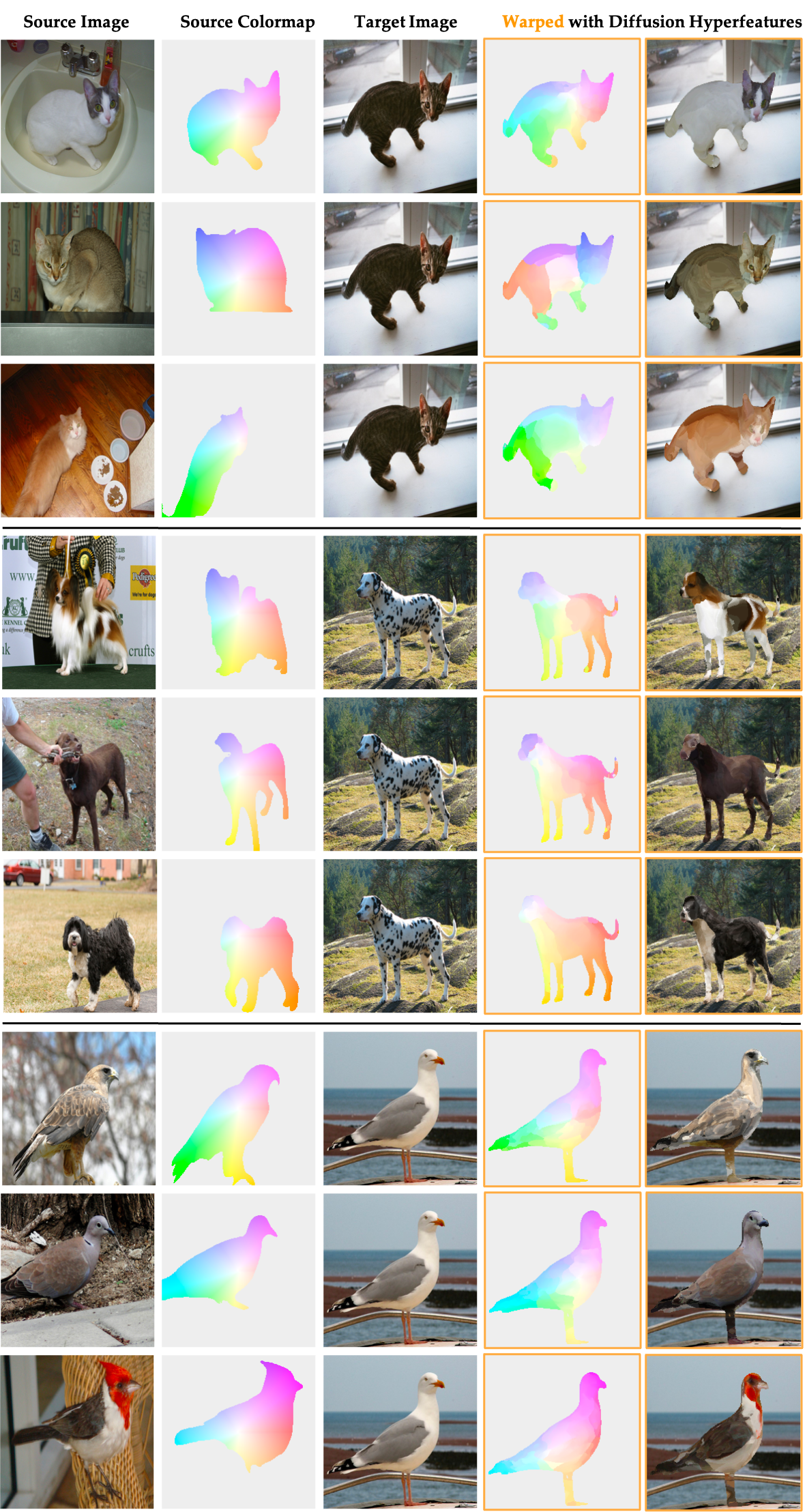

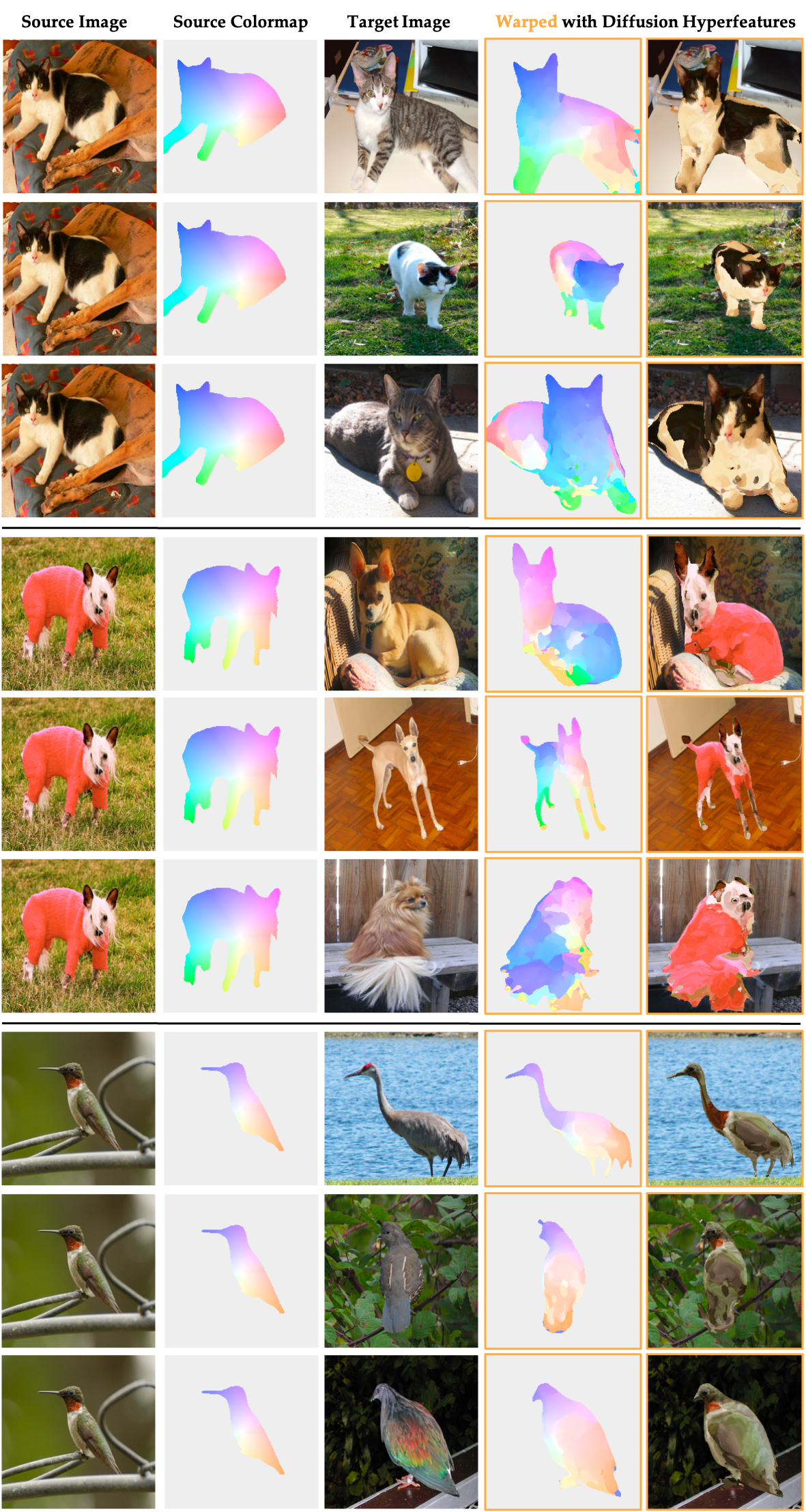

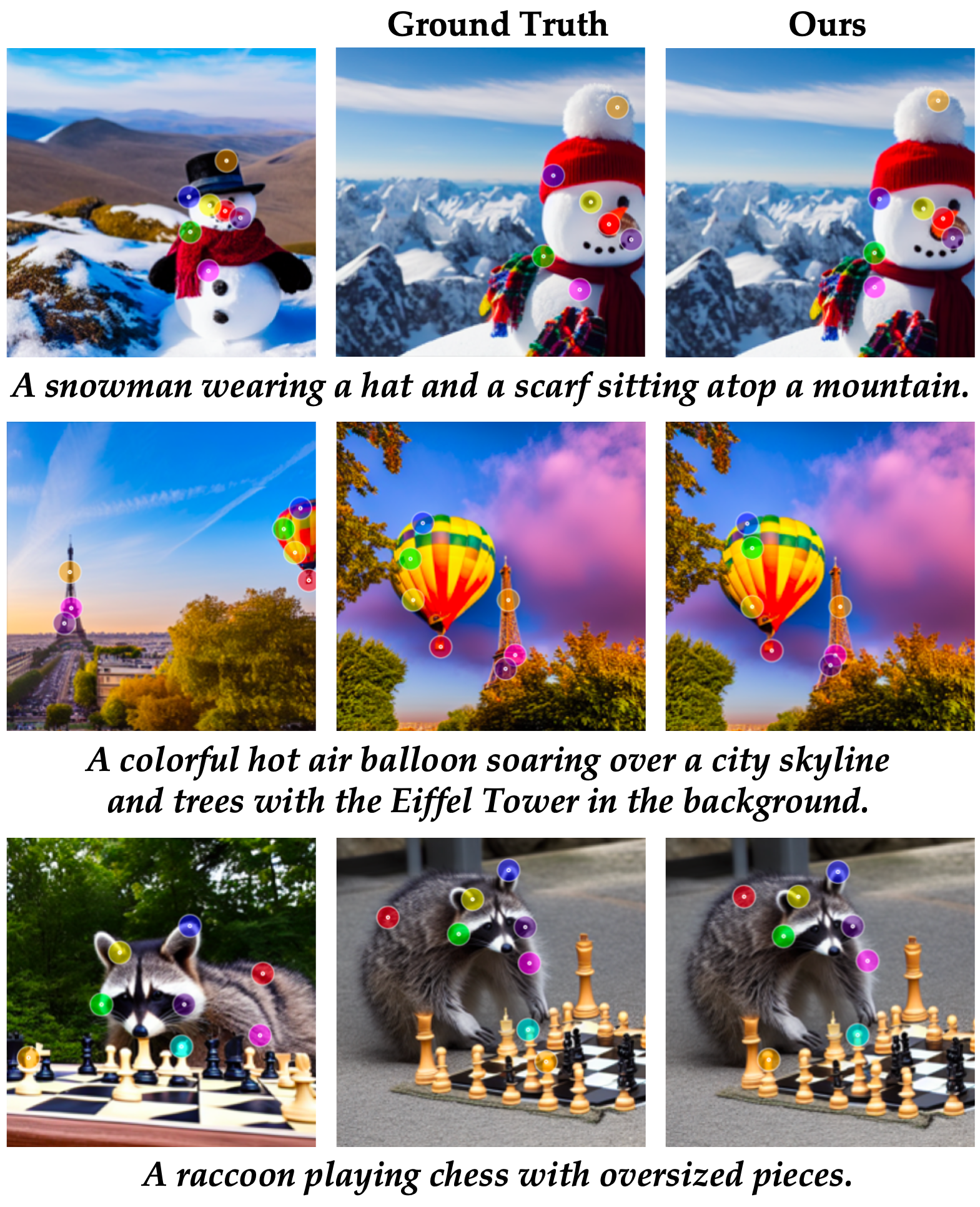

Dense Warping

Our aggregation network, which was trained on the semantic keypoint matching task, can also be used for dense warping. We follow the visualization format from Zhang et al. (arXiv 2023). Here, we show examples of warps on both real and synthetic images for cat, dog, and bird (where the synthetic images were synthesized from the prompt "Full body photo of {category}."). When warping, we compute nearest-neighbor matches for all pixels within an object mask, with no visual postprocessing.
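The warping step described above can be sketched as follows. This is an illustration under assumptions, not the exact procedure used for the figures: it assumes a backward warp (each masked target pixel pulls the color of its nearest-neighbor source pixel), cosine similarity as the matching score, and descriptor maps at the same resolution as the images. The function name `dense_warp` and its arguments are hypothetical.

```python
import numpy as np

def dense_warp(source_image, source_desc, target_desc, target_mask):
    """Warp source colors onto the target's object mask (illustrative only).

    source_image: (H, W, 3) array of source pixel colors.
    source_desc:  (C, H, W) descriptor map of the source image.
    target_desc:  (C, H, W) descriptor map of the target image.
    target_mask:  (H, W) boolean object mask on the target image.
    """
    c, h, w = source_desc.shape
    src = source_desc.reshape(c, h * w)
    src = src / (np.linalg.norm(src, axis=0, keepdims=True) + 1e-8)

    # Gather descriptors for every pixel inside the target mask: shape (C, K).
    rows, cols = np.nonzero(target_mask)
    queries = target_desc[:, rows, cols]
    queries = queries / (np.linalg.norm(queries, axis=0, keepdims=True) + 1e-8)

    # Cosine similarity of each masked target pixel to every source pixel.
    similarity = queries.T @ src          # shape (K, H*W)
    best = similarity.argmax(axis=1)      # flat index of best source pixel

    # Copy matched source colors; pixels outside the mask stay zero.
    warped = np.zeros_like(source_image)
    warped[rows, cols] = source_image.reshape(h * w, -1)[best]
    return warped
```

The similarity matrix here has one row per masked pixel and one column per source pixel, so for large images a practical version would likely process the masked pixels in chunks.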